Effortless CI/CD: Integrating ArgoCD into Kubernetes Workflows

Continuous integration and continuous deployment (CI/CD) pipelines have become a cornerstone of efficient software delivery. Kubernetes, with its scalability and flexibility, has been a favorite among engineers for orchestrating containerized applications. However, traditional deployment methods using raw Kubernetes manifests can often become cumbersome and error-prone. This is where GitOps and tools like ArgoCD come to the rescue. Let’s dive into how to integrate ArgoCD into Kubernetes workflows and explore why GitOps is a game-changer for modern CI/CD pipelines.

Traditional Kubernetes Deployment: The Challenges

Traditional Kubernetes deployments involve directly managing manifests (YAML files) using kubectl. While this approach works for small projects, it has several limitations:

- Manual Intervention: Frequent manual updates and hand-edited configurations invite human error.

- Lack of Version Control: Without a versioned history of your manifests, it is hard to tell who changed what, when, and which revision is actually running in the cluster.

- Difficulty in Rollbacks: Reverting to a stable state during failures is not straightforward.

- Complex Multi-Environment Management: Ensuring consistency across dev, staging, and production environments becomes a tedious task.

These challenges make it evident that a more structured and automated approach is required. Enter GitOps.

What is GitOps?

GitOps is a modern approach to CI/CD that uses Git as the single source of truth for application and infrastructure definitions. The main principles of GitOps include:

- Declarative Descriptions: Applications and infrastructure are defined declaratively.

- Version Control: Every change is versioned in Git, providing a clear audit trail.

- Automated Deployments: Tools automatically apply changes described in Git to the Kubernetes cluster.

With GitOps, you get:

- Improved developer productivity.

- Consistent and reliable deployments.

- Enhanced security through traceable changes.

Why ArgoCD for GitOps?

ArgoCD is a powerful GitOps tool designed to manage Kubernetes deployments. It monitors your Git repositories and keeps your Kubernetes clusters in sync with the desired state defined in Git. Here are its key features:

- Declarative and Automated: ArgoCD continuously reconciles the cluster state with the Git repository.

- Application Synchronization: It detects drift and allows automated or manual syncing.

- Multi-Cluster Support: Manage applications across multiple Kubernetes clusters.

- Integration with Helm and Kustomize: Simplifies handling complex application configurations.

- Visual Dashboard: Provides an intuitive UI for monitoring application states and performing operations.

Integrating ArgoCD into Kubernetes Workflows

Here’s a step-by-step guide to setting up ArgoCD in your Kubernetes environment:

1. Set Up Kubernetes Cluster

Ensure you have a running Kubernetes cluster. You can use Minikube, kind, or a managed Kubernetes service like GKE, AKS, or EKS.

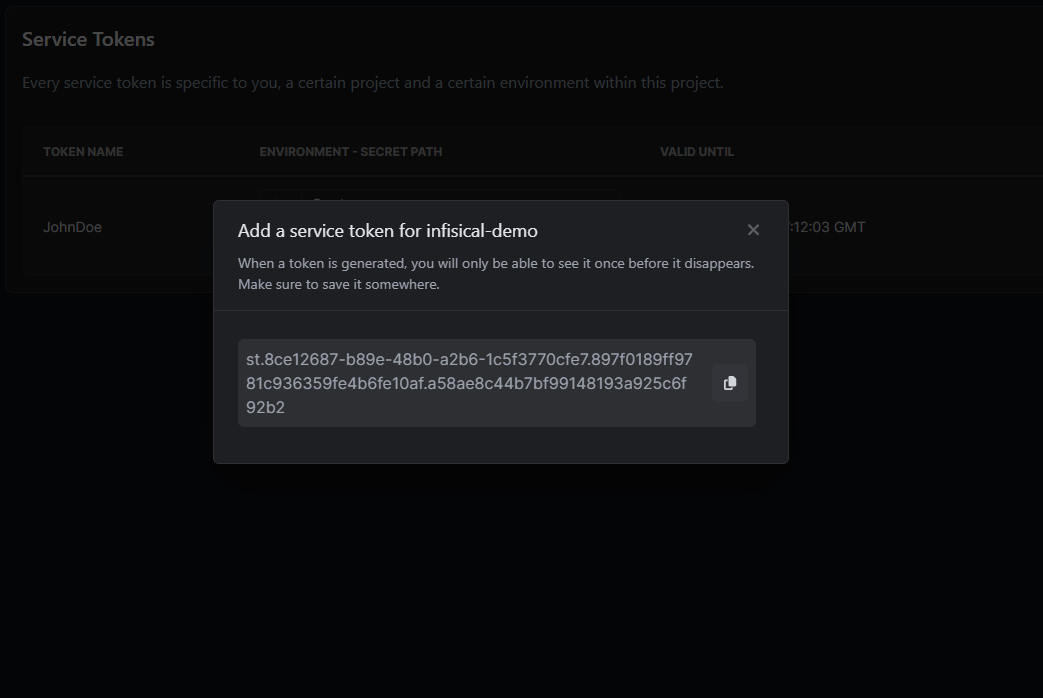
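If you do not have a cluster yet, a local kind cluster is a quick option. This sketch assumes kind and kubectl are installed; the cluster name `argocd-demo` is a hypothetical choice, and kind names the resulting kubeconfig context `kind-<name>`. The small `run` helper prints each command instead of executing it when `DRY_RUN=1`, so you can review the steps first.

```shell
#!/usr/bin/env bash
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Create a local kind cluster and check that its API server responds.
# The default name "argocd-demo" is only an example.
create_local_cluster() {
  local name="${1:-argocd-demo}"
  run kind create cluster --name "$name" || return 1
  run kubectl cluster-info --context "kind-${name}"
}
```

Call `create_local_cluster` to build the cluster, or `DRY_RUN=1 create_local_cluster` to only print the commands.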

2. Install ArgoCD

You can install ArgoCD using the official Helm chart, which provides flexibility for customizing your installation. Follow these steps:

Install ArgoCD Helm Chart

- Add the ArgoCD Helm repository.

- Install ArgoCD in a dedicated namespace (e.g., argocd).
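A minimal sketch of these two steps, assuming Helm 3 is installed and the chart comes from the argoproj/argo-helm repository at https://argoproj.github.io/argo-helm. The repository alias `argo` and the release name `argocd` are illustrative choices, and the namespace can be overridden with `NAMESPACE`. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Add the argo-helm repository and install the argo-cd chart.
# The alias "argo" and release name "argocd" are example choices.
install_argocd_helm() {
  local ns="${NAMESPACE:-argocd}"
  run helm repo add argo https://argoproj.github.io/argo-helm || return 1
  run helm repo update || return 1
  run helm install argocd argo/argo-cd --namespace "$ns" --create-namespace
}
```

Running `install_argocd_helm` performs the installation; `--create-namespace` creates the target namespace if it does not exist yet.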

Install ArgoCD Image Updater Helm Chart

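- Add the ArgoCD Image Updater Helm repository. The Image Updater chart is published in the same argo-helm repository as ArgoCD, so this step is already done if you added that repository above.

- Install the Image Updater in the same namespace.

- Configure the Image Updater by adding the required annotations to your ArgoCD applications for automatic image updates.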
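A sketch of the Image Updater steps, assuming the argo-helm repository has been added under the alias `argo` and that you use the annotation-based configuration of Image Updater 0.x releases (newer releases may configure this differently). Image Updater 0.x only supports applications rendered by Helm or Kustomize. The application name, the image alias `app`, and the image reference are hypothetical placeholders. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Install the Image Updater chart into the ArgoCD namespace.
install_image_updater() {
  local ns="${NAMESPACE:-argocd}"
  run helm install argocd-image-updater argo/argocd-image-updater --namespace "$ns"
}

# Opt an Application into automatic image updates.
# $1: Application name, $2: image to track (both hypothetical examples).
# "app" is an arbitrary alias that ties the two annotations together.
annotate_for_image_updates() {
  local app="$1" image="$2" ns="${NAMESPACE:-argocd}"
  run kubectl annotate application "$app" -n "$ns" \
    "argocd-image-updater.argoproj.io/image-list=app=${image}" \
    "argocd-image-updater.argoproj.io/app.update-strategy=semver"
}
```

For example, `annotate_for_image_updates my-app registry.example.com/team/my-app` asks Image Updater to track semantic-version tags of that (hypothetical) image for `my-app`.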

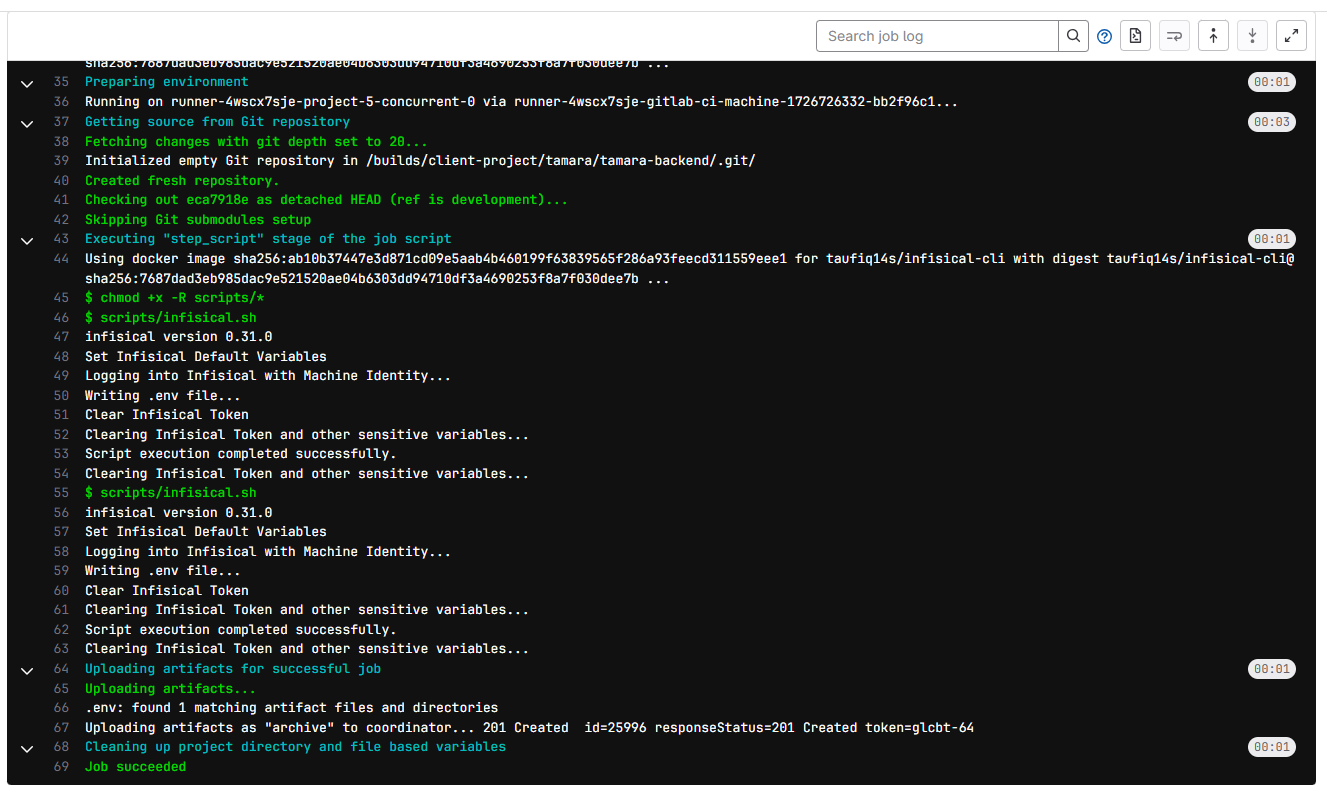

Now you have both ArgoCD and its Image Updater running in your cluster, ready to automate deployments and image updates. If you would rather not use Helm, you can instead install ArgoCD from the upstream manifests with the following commands:

```shell
kubectl create namespace argocd
kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml
```

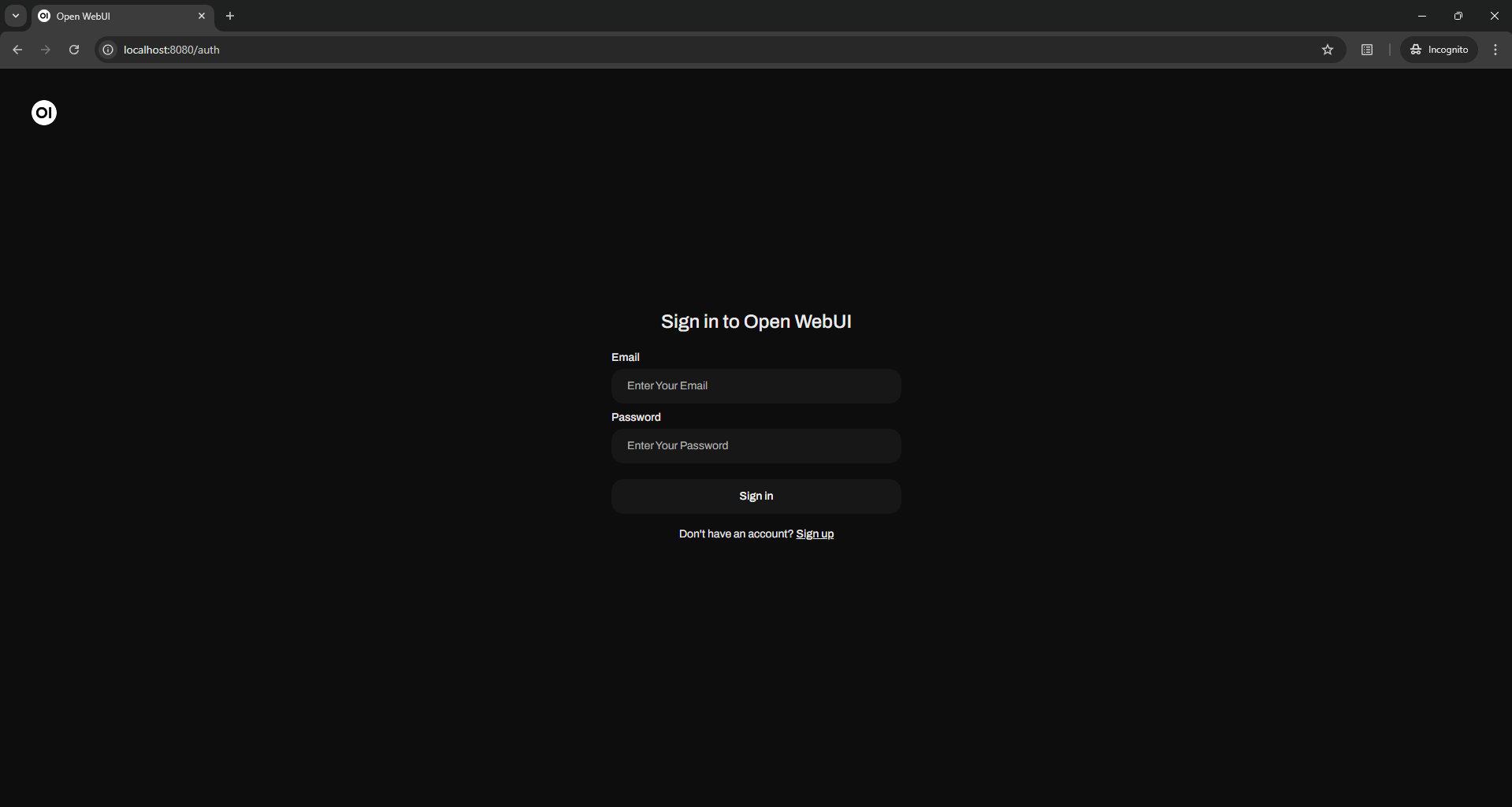

3. Access ArgoCD UI

Expose the ArgoCD server using a LoadBalancer Service or port-forwarding. If you forward the server to local port 8080, visit https://localhost:8080 to access the ArgoCD UI.
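A sketch of the port-forwarding option and of fetching the initial `admin` password, assuming ArgoCD runs in the `argocd` namespace, its server Service is named `argocd-server` (the default with the upstream manifests), and the argocd CLI is installed. `kubectl port-forward` keeps running in the foreground, so start it in a separate terminal. Setting `DRY_RUN=1` prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Forward https://localhost:8080 to the argocd-server Service (HTTPS on 443).
open_ui_tunnel() {
  local ns="${NAMESPACE:-argocd}"
  run kubectl port-forward svc/argocd-server -n "$ns" 8080:443
}

# Print the auto-generated password for the "admin" user.
initial_admin_password() {
  local ns="${NAMESPACE:-argocd}"
  run argocd admin initial-password -n "$ns"
}
```

Log in to the UI as `admin` with the printed password, and change it once you are in.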

4. Configure a Git Repository

Push your Kubernetes manifests, Helm charts, or Kustomize configurations to a Git repository. This repository will act as the source of truth for your deployments.
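As an illustration, this sketch writes a minimal Deployment manifest into a `my-app` directory, matching the `--path my-app` used in step 5. The function name, the image `nginx:1.27`, and the single replica are hypothetical choices, not requirements.

```shell
#!/usr/bin/env bash
# Write a minimal Deployment manifest into a directory (default: my-app).
# $1: directory name (also used as the app name), $2: container image.
scaffold_app_dir() {
  local dir="${1:-my-app}" image="${2:-nginx:1.27}"
  local name="${dir##*/}"   # basename of the directory
  mkdir -p "$dir" || return 1
  cat > "$dir/deployment.yaml" <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ${name}
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      containers:
        - name: ${name}
          image: ${image}
          ports:
            - containerPort: 80
EOF
}
```

After running `scaffold_app_dir my-app`, commit the directory and push it to your repository so ArgoCD can read it.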

5. Add Applications to ArgoCD

Link your Git repository to ArgoCD using the UI or the argocd CLI (the CLI must first be authenticated with argocd login):

```shell
argocd app create my-app \
  --repo https://github.com/your-org/your-repo.git \
  --path my-app \
  --dest-server https://kubernetes.default.svc \
  --dest-namespace default
```
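The same Application can also be declared as a manifest and stored in Git, which keeps ArgoCD's own configuration under version control. A minimal sketch that renders such a manifest, assuming ArgoCD is installed in the `argocd` namespace and the application belongs to the built-in `default` project; the function name and `targetRevision: HEAD` are illustrative choices.

```shell
#!/usr/bin/env bash
# Render an ArgoCD Application manifest equivalent to the CLI call in step 5.
# $1: app name, $2: Git repo URL, $3: path in the repo, $4: target namespace.
render_application() {
  local name="$1" repo="$2" path="$3" dest_ns="${4:-default}"
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: ${name}
  namespace: argocd
spec:
  project: default
  source:
    repoURL: ${repo}
    targetRevision: HEAD
    path: ${path}
  destination:
    server: https://kubernetes.default.svc
    namespace: ${dest_ns}
EOF
}
```

For example, `render_application my-app https://github.com/your-org/your-repo.git my-app default | kubectl apply -f -` creates the Application, and the same output can be committed to Git instead.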

6. Enable Auto-Sync

Set up auto-sync so that ArgoCD applies changes pushed to the Git repository automatically instead of waiting for a manual sync.
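A sketch of enabling auto-sync from the CLI for the `my-app` application from step 5, assuming you are logged in with the argocd CLI. `--auto-prune` lets ArgoCD delete resources that were removed from Git, and `--self-heal` reverts changes made directly in the cluster; both are optional and included here as an assumption about the behavior you want. Setting `DRY_RUN=1` prints the command instead of running it.

```shell
#!/usr/bin/env bash
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Switch an existing application to automated sync with pruning and self-heal.
enable_auto_sync() {
  local app="${1:-my-app}"
  run argocd app set "$app" --sync-policy automated --auto-prune --self-heal
}
```

In a declarative Application manifest, the equivalent setting is `spec.syncPolicy.automated` with `prune: true` and `selfHeal: true`.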

Tools That Complement ArgoCD

- Docker: For containerizing your applications.

- GitLab CI/CD: Automate building and pushing images to a container registry.

- Helm: Simplify application deployment using Helm charts.

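- FluxCD: Another GitOps tool with a similar pull-based model; it is usually chosen as an alternative to ArgoCD rather than run alongside it, but it is worth evaluating when comparing GitOps workflows.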

Benefits of Using GitOps Over Traditional Deployments

- Consistency Across Environments: Each environment's desired state is defined in Git, so differences between environments are explicit and changes are promoted through reviewed commits.

- Versioned Rollbacks: Easy rollbacks to previous states by reverting the Git commit.

- Improved Collaboration: Teams can work collaboratively using Git's branching and pull request model.

- Enhanced Security: Changes are traceable, and automated workflows reduce human error.

- Scalability: Manage multiple clusters and environments seamlessly.
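The versioned-rollback benefit can be seen with plain Git: a rollback is a new commit that undoes the faulty one, and with auto-sync enabled ArgoCD then applies the reverted state. This sketch simulates that in a throwaway local repository; the file name `values.yaml`, the image tags, and the commit identity are hypothetical.

```shell
#!/usr/bin/env bash
# Simulate a GitOps rollback in a temporary repository and print the
# file content after reverting the latest commit.
demo_git_rollback() {
  local repo out
  repo="$(mktemp -d)" || return 1
  out="$(
    cd "$repo" || exit 1
    git init -q
    git config user.name "demo"
    git config user.email "demo@example.com"
    echo "image: nginx:1.26" > values.yaml
    git add values.yaml && git commit -q -m "deploy 1.26"
    echo "image: nginx:1.27" > values.yaml
    git commit -q -a -m "deploy 1.27"
    # Roll back by adding a commit that undoes the latest one.
    git revert --no-edit HEAD > /dev/null
    cat values.yaml
  )"
  rm -rf "$repo"
  echo "$out"
}
```

Because the revert is itself a commit, the faulty change and its rollback both remain in the history for auditing.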

Conclusion

Integrating ArgoCD into Kubernetes workflows elevates your CI/CD pipeline by embracing GitOps principles. The combination of automation, traceability, and consistency makes it a must-have for modern DevOps practices. Whether you’re managing a single application or a complex multi-cluster environment, ArgoCD simplifies the process, empowering teams to focus on delivering value rather than grappling with deployment complexities.

Ready to transform your CI/CD workflows? Start your journey with ArgoCD today and experience the power of GitOps!
